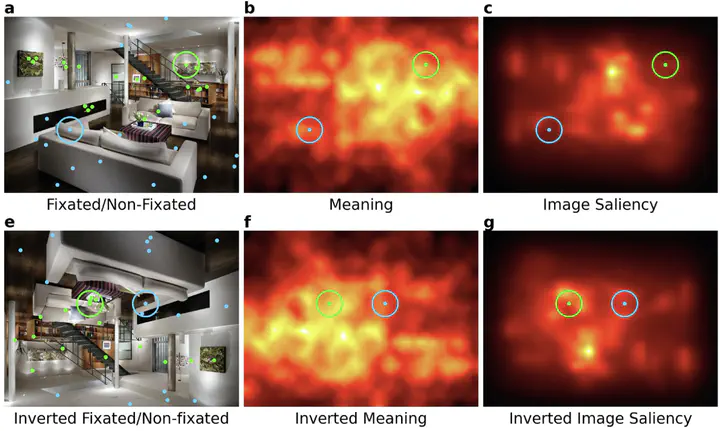

Scene inversion reveals distinct patterns of attention to semantically interpreted and uninterpreted features

Abstract

Semantic guidance theories propose that attention in real-world scenes is strongly associated with semantically informative scene regions. That is, we look where there are recognizable and informative objects that help us make sense of our visual environment. In contrast, image guidance theories propose that local differences in semantically uninterpreted image features, such as luminance, color, and edge orientation, primarily determine where we look in scenes. While it is clear that both semantic guidance and image guidance play a role in where we look in scenes, their relative contributions and how they interact remain poorly understood. In the current study, we presented real-world scenes in upright and inverted orientations and used general linear mixed effects models to examine how semantic guidance, image guidance, and observer center bias were associated with fixation location and fixation duration. We observed distinct patterns of change under inversion. Semantic guidance was severely disrupted by scene inversion, whereas image guidance was only mildly impaired and observer center bias was enhanced. In addition, fixation durations in semantically rich regions were shorter for inverted scenes than for upright scenes, whereas the associations of fixation duration with image salience and with center bias were unaffected by inversion. Together, these results provide important new constraints on theories and computational models of attention in real-world scenes.