I study how the brain processes the visual world.

Taylor R. Hayes is a project scientist at the Center for Mind and Brain at the University of California, Davis. He studies how humans use attention to process and understand complex, real-world scenes. In his research, he combines eye tracking and behavioral data with computational approaches to study the roles of scene semantics, image features, viewing task, and individual differences during active scene viewing.

Academic CV

- Attention

- Scene Perception

- Vision Language Models

- Eye Movements

- Pupillometry

PhD Cognitive Psychology, 2015

The Ohio State University

MA Cognitive Psychology, 2011

The Ohio State University

BA Philosophy, 2006

The Ohio State University

Featured Publications

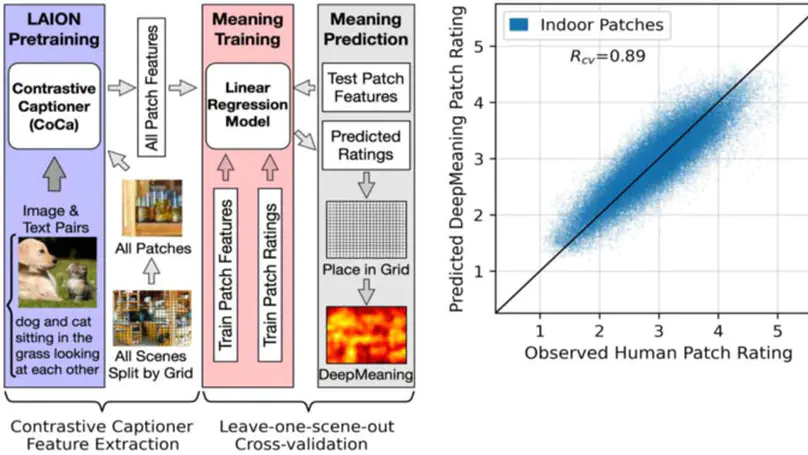

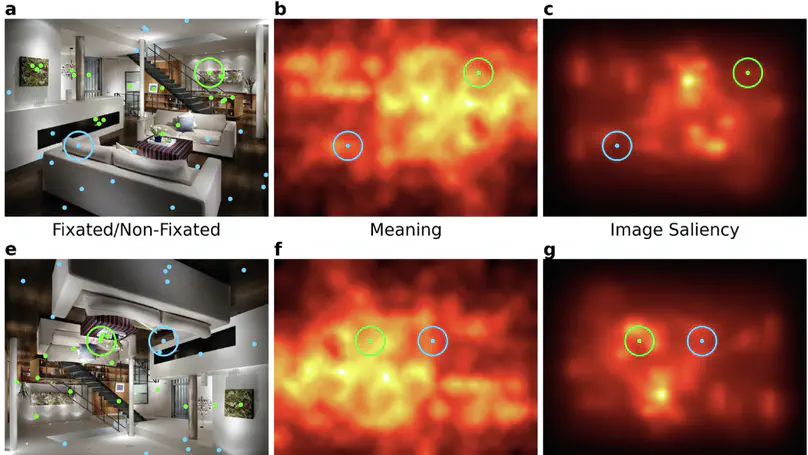

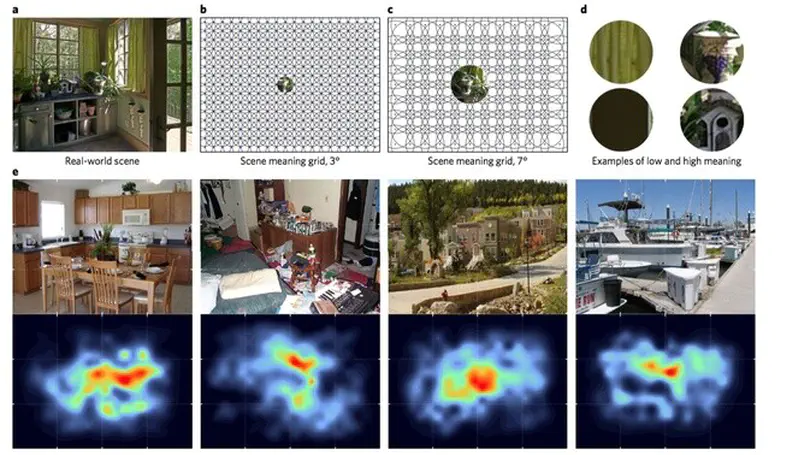

This study uses a vision-language transformer trained on billions of image-text pairs to investigate how local scene meaning guides human attention. ‘DeepMeaning’ automatically estimates semantic content in real-world scenes, predicts gaze patterns, detects semantic changes, and offers interpretable language-based insights. These results show that multimodal transformers can serve as powerful tools for linking vision and language to test theory on the role of scene semantics in attentional guidance.

We presented real-world scenes in upright and inverted orientations and used general linear mixed effects models to understand how semantic guidance, image guidance, and observer center bias were associated with fixation location and fixation duration. We observed distinct patterns of change under inversion. Semantic guidance was severely disrupted by scene inversion, while image guidance was mildly impaired and observer center bias was enhanced. In addition, we found that fixation durations for semantically rich regions decreased when viewing inverted scenes, while fixation durations for image salience and center bias were unaffected by inversion. Together these results provide important new constraints on theories and computational models of attention in real-world scenes.

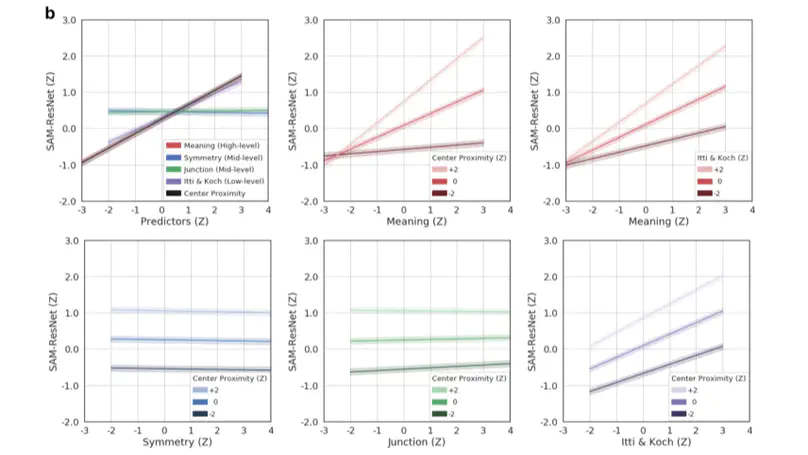

Deep saliency models represent the current state-of-the-art for predicting where humans look in real-world scenes. However, for deep saliency models to inform cognitive theories of attention, we need to know how deep saliency models prioritize different scene features to predict where people look. Here we open the black box of three prominent deep saliency models (MSI-Net, DeepGaze II, and SAM-ResNet) using an approach that models the association between attention, deep saliency model output, and low-, mid-, and high-level scene features.

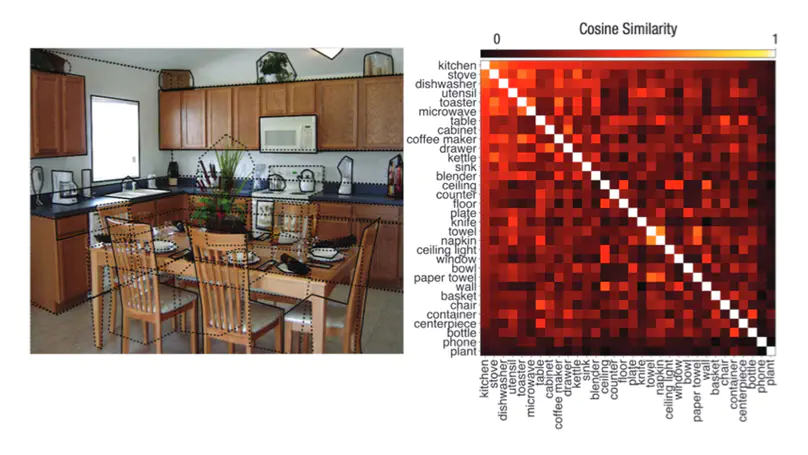

Object semantics are theorized to play a central role in where we look in real-world scenes, but are poorly understood because they are hard to quantify. Here we tested the role of object semantics by combining a computational vector space model of semantics with eye tracking in real-world scenes. The results provide evidence that humans use their stored semantic representations of objects to help selectively process complex visual scenes, a theoretically important finding with implications for models in a wide range of areas including cognitive science, linguistics, computer vision, and visual neuroscience.

Real-world scenes comprise a blooming, buzzing confusion of information. To manage this complexity, visual attention is guided to important scene regions in real time. What factors guide attention within scenes? A leading theoretical position suggests that visual salience based on semantically uninterpreted image features plays the critical causal role in attentional guidance, with knowledge and meaning playing a secondary or modulatory role. Here we propose instead that meaning plays the dominant role in guiding human attention through scenes.

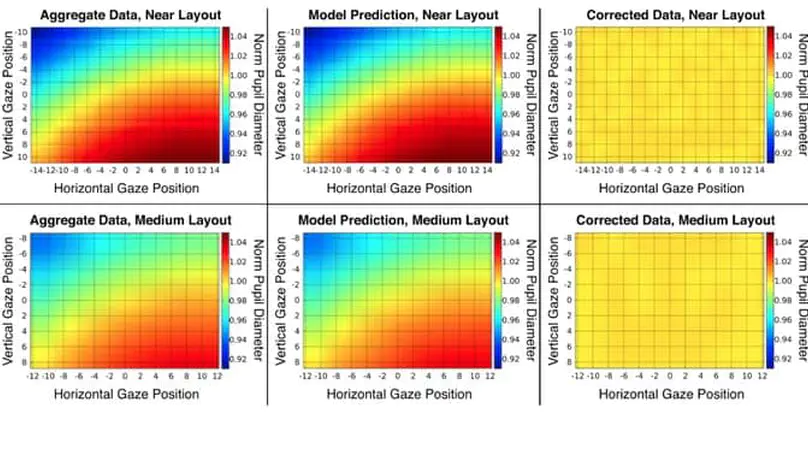

Pupil size is correlated with a wide variety of important cognitive variables and is increasingly being used by cognitive scientists. One serious confound that is often not properly controlled is pupil foreshortening error (PFE)—the foreshortening of the pupil image as the eye rotates away from the camera. Here we systematically map PFE using an artificial eye model and then apply a geometric model correction.
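As a rough illustration of what a geometric correction of this kind can look like, the sketch below assumes the measured pupil area shrinks in proportion to the cosine of the angle between the eye-to-camera axis and the eye-to-target axis. The function names, coordinate layout, and the simple cosine form are assumptions made for this example; they are not taken from the study's actual model or parameter values.

```python
import math


def foreshortening_factor(eye, camera, target):
    """Cosine of the angle between the eye-to-camera and eye-to-target axes.

    All positions are (x, y, z) tuples in the same arbitrary unit.
    A value of 1.0 means the eye points straight at the camera
    (no foreshortening under this simplified assumption).
    """
    to_camera = [c - e for c, e in zip(camera, eye)]
    to_target = [t - e for t, e in zip(target, eye)]
    dot = sum(a * b for a, b in zip(to_camera, to_target))
    norm_camera = math.sqrt(sum(a * a for a in to_camera))
    norm_target = math.sqrt(sum(b * b for b in to_target))
    return dot / (norm_camera * norm_target)


def correct_pupil_area(measured_area, eye, camera, target):
    """Undo the assumed cosine foreshortening of a measured pupil area."""
    factor = foreshortening_factor(eye, camera, target)
    if factor <= 0:
        raise ValueError("target is 90 degrees or more away from the camera axis")
    return measured_area / factor


# Hypothetical layout: eye at the origin, camera straight ahead,
# gaze target rotated 60 degrees away from the camera axis.
eye = (0.0, 0.0, 0.0)
camera = (0.0, 0.0, 1.0)
target = (math.sin(math.radians(60)), 0.0, math.cos(math.radians(60)))

corrected = correct_pupil_area(500.0, eye, camera, target)
```

Under these assumptions, a gaze position 60 degrees off the camera axis halves the apparent pupil area, so the correction doubles the measured value. A real correction would also need the measured geometry of the eye tracker, display, and participant.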

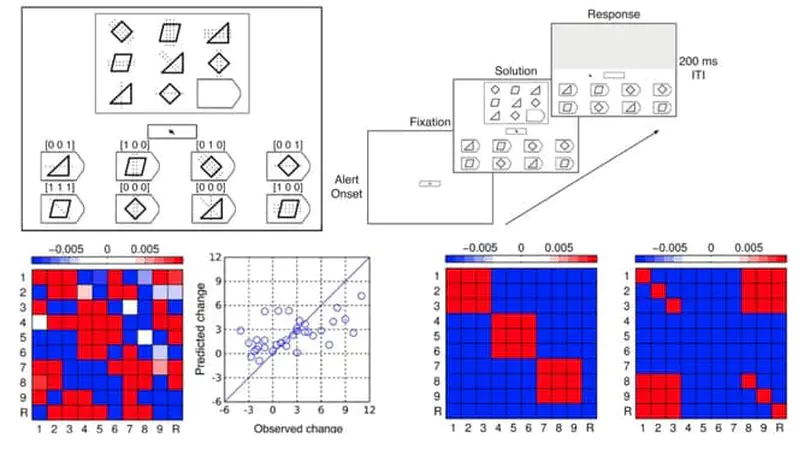

Recent reports of training-induced gains on fluid intelligence tests have fueled an explosion of interest in cognitive training, now a billion-dollar industry. The interpretation of these results is questionable because score gains can be dominated by factors that play marginal roles in the scores themselves, and because intelligence gain is not the only possible explanation for the observed control-adjusted far transfer across tasks. Here we present novel evidence that the test score gains used to measure the efficacy of cognitive training may reflect strategy refinement instead of intelligence gains.